High energy consumption is a rising problem in the world of Artificial Intelligence. The current cutting-edge algorithms need a very potent server space for development and usage. AI models such as ChatGPT need huge amounts of data for training and consist of billions of parameters. Therefore, they consume vast server space and, in consequence, energy.

As believed by many experts, we can solve this problem from two different angles:

- Find alternative ways for energy production so that we can keep increasing the usage of our silicon-based servers. Top alternatives include fusion, geothermal, and nuclear energy, however these energy sources are either very immature or they cause significant public concerns regarding safety.

- Unconventional computing, which is a field exploring new ways of computing, both for hardware and software.

We believe that unconventional computing is the best way to reduce CO2 emissions. This creates an opportunity not only to reduce energy consumption but also to develop better AI systems.

Unconventional Computing

We can consider digital computing on silicon-based hardware as conventional computing. In this type of computing, electrons flow between logic gates which encode and transform information.

On the other hand, we have unconventional computing, with new types of hardware and software.

There are different perspectives from which we can think about unconventional computing of information. On a general level, we can consider computations:

- on physical objects (apples, billiard balls, or anything else that is countable);

- on complex nonlinear systems, for example water;

- as interactions (human-machine, between humans, between machines).

We can also look at new ways of computing from different fields of basic science, such as physics, chemistry, biochemistry, and biology. This allows building new computational systems, where the units of information could be any particles, waves, chemical substances, and even living cells. A few interesting examples include quantum computing, reservoir computing, and neuromorphic computing.

One of the widely discussed unconventional computing methods is quantum computing, for which electrons and photons are used to process information. Quantum computing is at the R&D level, and the most promising application for it seems to be cryptography. It is challenging to create reliable hardware for quantum computing, —though a major milestone was reached when Google’s Sycamore processor performed a complex sampling task in 200 seconds that would take a classical supercomputer 10,000 years, demonstrating quantum supremacy. It is hard to estimate the energy efficiency of quantum computers at this stage of the development. While current quantum computers are energy-intensive due to high energy consumption for cooling and control of the hardware. However, we can assume that future fault-tolerant systems could offer massive energy efficiency gains for specific problems by solving them exponentially faster than classical machines.

Another interesting field is reservoir computing, where information is processed by a fixed system, which can be digital or physical. The main difference between standard machine learning and reservoir computing is that we do not aim to change internal parameters (such as weights in neural nets), we aim only to map temporal information into the system, to get reliable input-output relationship. This means that reservoir computers do not learn, we only learn to observe their responses. The advantage of reservoir computing is that it uses less computational power, as compared to digital computing, and that even a physical object can be a reservoir. An interesting example of physical reservoir computing is a water bucket, which is not a network but continuous system. Water can be perturbed on the surface and these perturbations cause characteristic response. Another special case of reservoir computing is Liquid State Machine, which is a digital system where we can input patterns which are encoded in both space and time (using spiking neural networks inspired by neuroscience).Reservoir computing is energy efficient for specific applications.

Unconventional Computing and Neuroscience

When discussing neuroscience’s contribution to unconventional computing, neuromorphic computing is essential to mention. It is a branch inspired by the human brain, where information is encoded in space and time, rather than in binary 0s and 1s like in traditional digital systems. The most popular model in neuromorphic computing is the Spiking Neural Networks (SNNs), which encodes information in time-dependent manner. One of the key objectives of developing neuromorphic computing is to perform computations at lower energy cost as compared to traditional digital systems. Brain is very energy efficient because neurons spike only when they have meaningful information to process, in contrary to digital systems, which process every piece of information with the same effort, leading to huge energy consumption. Another characteristic of brain which are often mimicked by neuromorphic technologies is in memory computing (no distinction between memory and computing space, which also saves energy consumption) and low latency.

We can simulate SNNs using digital hardware (for example snnTorch library), this is rather done for research purpose. To achieve true energy savings, we need to customise the hardware. Current GPU chips used for digital computing are optimised for large matrix operations on 0s and 1s encoded data. We can customise hardware for SNN by building digital chips which architecture is inspired with human brain (for example, IBM True North, Intel’s Loihi or Spinnaker) or we can go even further and build analog hardware which can encode information in time dependent manner (for example Dynapse, Neurogrid).

Looking at neuromorphic field we can see that developing computational methods inspired by human brain could bring significant savings of energy needed for computing. Scientists are still looking for more suitable materials which can process data in a similar way as a human brain. We can even consider applying the living neurons themselves as an extension to this approach.

Biological Computing

The human brain is the best-known device for processing of complex information. What if we can use building blocks of the human brain, neurons, for computation? This is a new kind of unconventional computing, biological computing (also called biocomputing / wetware computing / organoid intelligence). This is a field in which we create new hardware and develop new algorithms suitable for this hardware.

Interestingly, we can see a following trend in computer history: we started with analog computers, which were later replaced by digital computers and now we may come back to analog computing through unconventional computing. Most of the methods of unconventional computing described above are analog.

Energy efficiency of biological computing

One of the biggest advantages of biological computing is that neurons compute information with much less energy than digital computers. It is estimated that living neurons can use over 1 million times less energy than the current digital processors we use. When we compare them with the best computers currently in the world, such as Hewlett Packard Enterprise Frontier, we can see that for approximately the same performance (estimated 1 exaFlop), the human brain uses 10-20 W, as compared to the computer using 21 MW.

This is one of the reasons why using living neurons for computations is such a compelling opportunity. Apart from possible improvements in AI model generalization, we could also reduce greenhouse emissions without sacrificing technological progress.

What are the challenges?

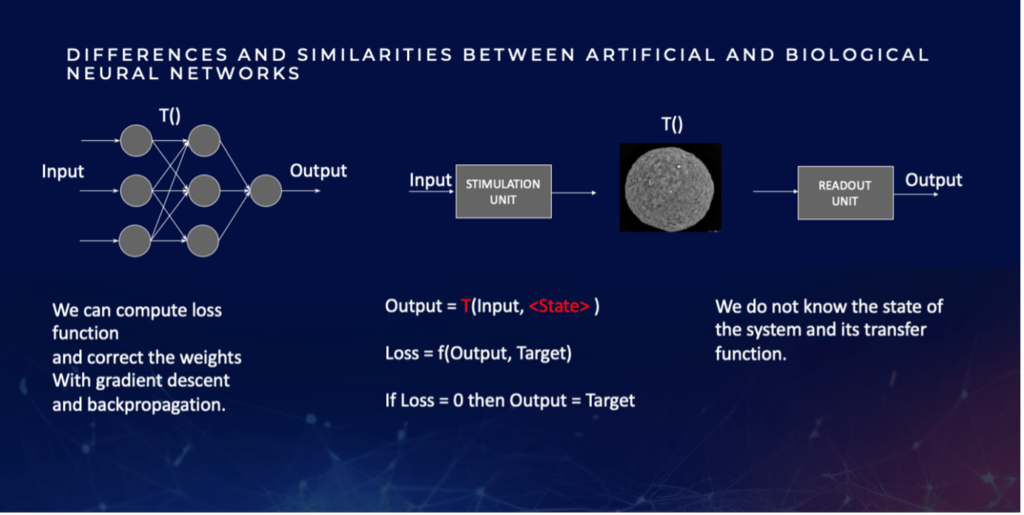

Of course, using living neurons to build new generation bioprocessors is not an easy task. Despite all the advantages, such as energy efficiency, relatively easy manufacturing, and proven ability to process information, bioprocessors from living neurons are hard to develop. The main reason for this is the fact that we do not know the state of the system in the structures built from living neurons. Digital technologies are very easy to control, and all their parameters can be read and modified. We know which information we input, what is the transfer function and its parameters, and we can see the difference between the output and target answer. All this data in machine learning allows us to adjust the learning algorithm with such methods as gradient descent and backpropagation.

When we work with living neurons, we can observe relationships between the data we input to neurons and their response, but it is very difficult to measure what is going inside the neuronal structure. In technical terms, we do not know the transfer function and the state of the system. This is a real black box, much harder to understand than the ‘black box’ in artificial neural networks. This means that we need a lot of experiments to develop the algorithms for computations on living neurons. Since we cannot control the internal state of the system, we need to apply a lot of trial and error in order to develop good methods to communicate with neurons in vitro.

This is why at FinalSpark we have built a 24/7 platform allowing remote automated experiments on living neurons. This allows us to run very long experiments, for hours, or even weeks.

We also modulate neuronal learning with neurotransmitters. As in the brain, learning is modulated by dopamine, serotonin, adrenaline and many other neurotransmitters, and small molecules.

We believe that neurotransmitters can help us to better control the behaviour of the neurons and therefore accelerate our development of stimulation algorithms.

This picture illustrates the main similarities and differences between artificial neural networks and biological neural networks. In both cases we input the data and receive the output which we can compare to our target (expected answer). In artificial neural networks we know exactly how the information is processed at each step, we know the state of the processing system and which transfer function is transforming the input to output. In contrast, in biological computing, we do not know the state of the system and its transfer function. An additional difficulty lies in the fact that the system is dynamic, therefore its state and its transfer function change over time. This causes changes in the relationships between input and output. In consequence, the experiments may not be reproducible over a long period of time. Therefore, the same input can cause different output at different time points. This means that we need to analyse time series of the experiments, to see if we can see any patterns evolving over time. We also look a lot at global changes in the activity of our living bioprocessors, as data from individual electrodes can be too noisy. This is similar to the human brain, as it also processes very noisy information, which makes sense only on a statistical level.

FinalSpark

To accelerate the development of biological computing, FinalSpark has developed a remote platform connected to our lab. This is the largest wetware computing open innovation platform in the world. The FinalSpark team together with scientists from universities worldwide run experiments with the main objective: how to develop the language to speak with living neurons in vitro? This language is currently mainly based on electrical stimulations, but we are also performing tests with chemical stimulations, such as rewarding learning progress with dopamine.

Follow us for the updates on our development or get in touch if you are interested in doing a cutting-edge research project with us!